RL Model Development & Pre-Training

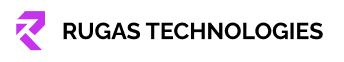

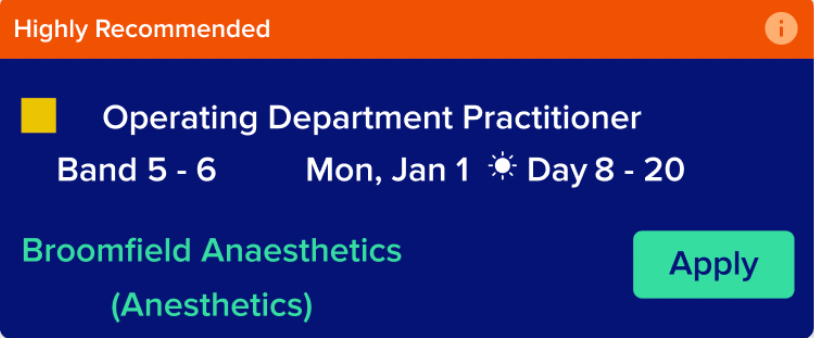

Designed a reinforcement learning architecture with reward functions that capture shift-acceptance, user-satisfaction, and operational-efficiency metrics.
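As a rough illustration of how several objectives can be folded into one scalar reward, the sketch below computes a weighted sum of an acceptance term, a satisfaction term, and an efficiency term. The `ShiftOutcome` fields, the `RewardWeights` defaults, and `shift_reward` are hypothetical. The actual reward design is not described here, and any real weights would need tuning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShiftOutcome:
    """Observed result of offering one shift to one user (hypothetical fields)."""
    accepted: bool        # did the user accept the offered shift?
    satisfaction: float   # user satisfaction score, normalized to [0, 1]
    coverage_gain: float  # fraction of an open staffing gap filled, in [0, 1]
    cost_penalty: float   # normalized operational cost of the assignment, in [0, 1]


@dataclass(frozen=True)
class RewardWeights:
    """Hypothetical default weights; real values would be tuned per deployment."""
    acceptance: float = 1.0
    satisfaction: float = 0.5
    efficiency: float = 0.5


def shift_reward(outcome: ShiftOutcome, w: RewardWeights = RewardWeights()) -> float:
    """Scalar reward as a weighted sum of acceptance, satisfaction and efficiency terms."""
    # +1 for an accepted offer, -1 for a rejected one.
    acceptance_term = 1.0 if outcome.accepted else -1.0
    # Satisfaction only counts when the shift was actually taken.
    satisfaction_term = outcome.satisfaction if outcome.accepted else 0.0
    # Efficiency rewards filled coverage and penalizes operational cost.
    efficiency_term = outcome.coverage_gain - outcome.cost_penalty
    return (w.acceptance * acceptance_term
            + w.satisfaction * satisfaction_term
            + w.efficiency * efficiency_term)


# Accepted shift, moderate satisfaction, full coverage, low cost.
print(shift_reward(ShiftOutcome(accepted=True, satisfaction=0.5,
                                coverage_gain=1.0, cost_penalty=0.25)))  # 1.625
```

A weighted sum keeps each component interpretable and easy to rebalance. Constrained or multi-objective formulations are alternatives when one metric must never be traded away for another.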

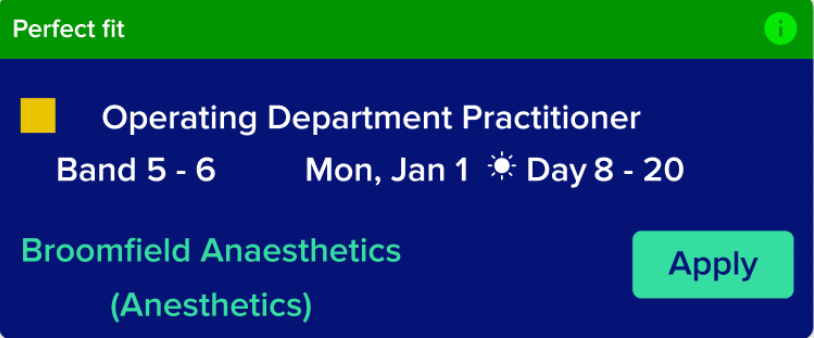

Pre-trained a base model on historical shift-assignment and acceptance data, establishing a foundational understanding of user preferences and shift characteristics.
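One common way to pre-train on logged acceptance data is to fit a supervised model that predicts the probability that a user accepts a given shift. Such a model can then warm-start or inform the RL policy. The sketch below uses plain logistic regression trained by stochastic gradient descent on log-loss. The feature encoding, `pretrain_acceptance_model`, and `acceptance_probability` are hypothetical, and the source does not say which model or objective was actually used.

```python
import math
import random
from typing import List, Sequence, Tuple

# Hypothetical record: (feature vector for a user-shift pair, 1 if accepted else 0).
Example = Tuple[Sequence[float], int]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def acceptance_probability(weights: Sequence[float], x: Sequence[float]) -> float:
    """Predicted acceptance probability; the last weight is the bias."""
    z = sum(w * xi for w, xi in zip(weights, x)) + weights[-1]
    return sigmoid(z)


def pretrain_acceptance_model(data: List[Example], epochs: int = 200,
                              lr: float = 0.1, seed: int = 0) -> List[float]:
    """Fit logistic-regression weights (bias last) by SGD on log-loss."""
    if not data:
        raise ValueError("need at least one historical example")
    n_features = len(data[0][0])
    weights = [0.0] * (n_features + 1)
    rng = random.Random(seed)
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit examples in a fresh random order each epoch
        for i in order:
            x, y = data[i]
            # Gradient of log-loss with respect to the logit is (p - y).
            err = acceptance_probability(weights, x) - y
            for j, xi in enumerate(x):
                weights[j] -= lr * err * xi
            weights[-1] -= lr * err
    return weights
```

For example, with hypothetical features `[matches_preferred_time, is_weekend]`, training on records where users accepted only shifts matching their preferred time yields a model that assigns high acceptance probability to `[1.0, 0.0]` and low probability to `[0.0, 1.0]`.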